Navigating Responsible AI: The Good, the Bad, and the Frameworks

The Drawbacks of a Framework-Centric Approach in Responsible AI

Is there anyone who doesn’t enjoy a good framework?

Amid the transparent confines of open-plan offices, hot-desking, and glass panels, frameworks hold sway. They balance the realms of strategy and execution, and MIT eloquently defines them as 'mental representations that order experience in ways enabling comprehension.' A framework is neither as inflexible and occasionally confounding as a policy nor as prescriptive as a set of processes.

In the hands of an impassioned executive, frameworks can easily turn into an execution weapon. Just as the tech industry might confidently declare ‘There's an app for that!’ to address a social issue, an annoyed employee enduring a lengthy company call may triumphantly remember a framework capable of cutting the conversation short.

Frameworks shine because they are quick to use, simplify problem-solving, and are easy to navigate. The Responsible AI (RAI) movement, by contrast, has none of these attributes.

How to solve a problem like the responsible AI movement?

The RAI movement may as well be the chameleon of professional trends, having posed as a community of practice, a school of thought, a discipline, and even a set of practices and standards. In the analogy of wearing multiple hats, RAI has been likened to both a beanie and a Royal Ascot hat. Unfortunately, it has yet to grow into a fully recognised field or industry.

The year 2023 proved particularly tumultuous for RAI, which is often conflated with AI governance: some see AI governance as a sub-field of RAI, others as an overarching umbrella. RAI also intersects with AI safety and AI assurance, both of which are possible future iterations of responsible AI. The distinctions between these disciplines vary across sectors, so navigating them requires a map or a pedantic intellectual.

Virginia Dignum, a distinguished AI scholar and computer scientist, provides a definition of responsible AI in The Oxford Handbook of Digital Ethics: "RAI is about human responsibility for the development of intelligent systems along fundamental human principles and values, ensuring human flourishing and well-being in a sustainable world."

Semantics aside, another frequent complaint within the expanding realm of RAI was the perceived lack of universally accepted standards and frameworks. Lo and behold, the situation has taken a U-turn, and we've entered an era of framework overload. AI risk management frameworks, AI governance frameworks, responsible AI governance frameworks, responsible AI frameworks: all differ in nature, scope, and intended audience.

Emerging from the sea of frameworks is the recent announcement of the ISO/IEC 42001 standard for AI management systems, which follows the announcements about the EU AI Act and the US Executive Order on AI in late 2023. The ISO standard has been acclaimed as a milestone in global AI governance.

The appeal of frameworks for advancing responsible AI is clear, but my experience as a responsible AI evangelist implementing tools, practices, and governance makes me sceptical of relying solely on a framework-first approach. While it's a step in the right direction, placing excessive trust in frameworks may not fully address the intricate sociotechnical challenges that have fuelled responsible AI's growth.

Given the complex nature of the subject (RAI isn't exactly synonymous with precision and simplicity), it felt appropriate to unravel the complexities using a familiar Western-style division—sorting through the positive, negative, and, in this case, the prevailing consensus on frameworks.

The good - frameworks can empower responsible AI efforts by:

1) Boosting AI Governance with Added Credibility

The adoption of responsible AI efforts across industries has been rather uneven. If RAI adoption were a dance-off, only a few businesses would be attempting the robot, with the vast majority content to stick to the Macarena.

A standardised, widely recognised, auditable, and business-friendly reference point can go a long way towards addressing the slow uptake. It's a cheat code for businesses overwhelmed by navigating various IT risks and their categorisation, innovation speed, the alignment of AI governance with other priorities, and the sprint to meet delivery deadlines.

Moreover, few things win corporate affection quite like frameworks and the recruitment of external consultants (although not necessarily responsible AI advocates). The perceived impartiality and guidance of esteemed entities such as Edelman, Gartner, or ISO carry significant weight, lending the field credibility.

2) Clarifying Roles and Responsibilities

Interdisciplinary problems such as 'AI hallucination' can turn into a corporate game of hot potato, passed from one department to the next. Deciding who should serve as the 'human in the loop', or establishing an AI policy grounded in the day-to-day realities of data scientists, are examples of broad and recent challenges that no single business department can tackle alone.

A framework can play the role of an organisational superhero, delineating clear roles and responsibilities by assigning specific issues to designated groups. An effective framework can also enhance communication by introducing a shared language across diverse groups.

For AI and RAI professionals, frameworks can offer temporary relief. They can assist in building a robust business case, expedite the development of a personalised framework, and contribute to advocacy and education efforts.

3) Streamlining Efficiency

Anyone who has ever referred to the Eisenhower matrix or the Pareto principle is likely privy to the benefits of frameworks in general. Frameworks can accelerate our collective decision-making, allowing us to respond to challenges more quickly and effectively. They can also act as a translator, giving everyone a common vocabulary.

4) Tracking RAI Progress: Measuring Collective Advancement

Finally, frameworks can map out the steps required to reach the RAI North Star. Serving as benchmarks, they offer a clearer view of commitment and maturity in AI governance across the field.

The bad - frameworks can hinder responsible AI efforts by:

1) Sidelining the process of ethical inquiry

‘If ethics continues to be seen as something to implement rather than something to design organizations around, “doing ethics” may become a performance of procedure rather than an enactment of responsible values.’

Mastering ethics means confronting the tough questions. It entails acknowledging diverse interpretations of what's morally right in technology use—considering culture, geography, society, math, and personal viewpoints. For example, is it ethically superior to offer loans selectively to older customers with good credit, or should everyone be treated the same, no matter their age? And when safeguarding kids online, is it ever okay to compromise on individual privacy?

Given that AI is deeply embedded in everyday decision-making, there's a pressing need for a clear approach to ethics, values, and moral consequences. Ethical methods within an organisation can take on wildly different shapes, moulded by individual and collective values; it's all about the context. Ethical inquiry is far broader than following the decision trees of a specific framework.

2) Drowning out the diversity of perspectives

Adopting a standard framework can exacerbate groupthink: when everyone works from the same checklist, everyone shares the same blind spots. In the whirlwind world of machine learning, plenty of discriminatory practices can slip through the cracks for those unfamiliar with the algorithm's ins and outs, its real-world applications, or both.

Just as diversity and inclusion initiatives champion a variety of voices, involving team members with diverse life experiences can inject fresh perspectives into ideation or auditing processes, mitigating the hazards of insular groupthink.

This is why red teaming, an increasingly popular method for identifying AI risks, gives participants free rein to pinpoint system flaws without handing them a step-by-step guide.

3) Standing in the way of employee empowerment

As mentioned, a framework can do wonders in defining roles and responsibilities. But an AI governance framework is only as good as the governance behind it and people willing to do the governing.

The private sector is often accused of treating sociotechnical challenges as if they're purely technical puzzles. The twist? The real game-changer can be social action and activism. Think of the Google walkouts, the Cambridge Analytica whistle-blower, and tech workers staging internal protests against shady tech practices. Time and again, it is employees who hold the moral compass.

When it comes to implementing policies or frameworks, it's not just about following the rules. It's about giving individuals a sense of personal responsibility and a voice beyond what the framework dictates. This could take the form of forums buzzing with discussion, ethics working groups, and trust and safety hackathons, all backed by strong sponsorship. This culture should thrive regardless of the framework in play.

4) Underestimating the complexity of the challenge

Relying on a checklist or framework might accelerate the process, but it can also sacrifice depth for simplicity. In such cases, the insights of an expert ethicist can be invaluable for dispelling common misconceptions, such as:

1) Not All Businesses Follow the Same Moral North: It is true that the tech industry operates on similar principles, and corporate values may seem alike, but the levers for change vary among organisations. Different customer segments might demand more or less ethical practices, and employees could champion different causes.

2) One Size Doesn't Fit All Ethical Values: In mathematics, there are many competing definitions of fairness, and they can disagree about the same system. Similarly, when it comes to spotting ethical risks, the devil lurks in the details. Take the common value of 'Transparency': to what extent? For whom? To whom? The answers vary with the context. Sometimes transparency for its own sake is meaningless, or it clashes with another value. In reality, arriving at the right conclusion often calls for nuanced conversations backed by a wealth of contextual knowledge.
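To make the point about competing fairness measures concrete, here is a minimal Python sketch comparing two commonly cited metrics on a hypothetical loan-approval example: demographic parity (are both groups approved at the same rate?) and equal opportunity (are truly creditworthy applicants in both groups approved at the same rate?). The groups, labels, decisions, and function names are all invented for illustration; this is not a library API or a recommended audit method.

```python
from typing import Dict, List, Sequence


def selection_rate(preds: Sequence[int]) -> float:
    """Share of applicants who receive a positive decision (1 = loan approved)."""
    return sum(preds) / len(preds)


def true_positive_rate(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Share of truly creditworthy applicants (label 1) who are approved."""
    decisions_for_positives = [p for y, p in zip(labels, preds) if y == 1]
    return sum(decisions_for_positives) / len(decisions_for_positives)


# Hypothetical toy data: labels say who is creditworthy, preds are the model's decisions.
groups: Dict[str, Dict[str, List[int]]] = {
    "A": {"labels": [1, 1, 0, 0], "preds": [1, 0, 1, 0]},
    "B": {"labels": [1, 1, 1, 0], "preds": [1, 1, 0, 0]},
}


def parity_gaps(groups: Dict[str, Dict[str, List[int]]]) -> Dict[str, float]:
    """Largest between-group difference for each of the two fairness metrics."""
    rates = {g: selection_rate(d["preds"]) for g, d in groups.items()}
    tprs = {g: true_positive_rate(d["labels"], d["preds"]) for g, d in groups.items()}
    return {
        "demographic_parity_gap": max(rates.values()) - min(rates.values()),
        "equal_opportunity_gap": max(tprs.values()) - min(tprs.values()),
    }


if __name__ == "__main__":
    print(parity_gaps(groups))
```

By one measure this hypothetical system looks perfectly fair (both groups are approved at a rate of 0.5); by the other, creditworthy applicants in group B are approved more often than those in group A (about 0.67 versus 0.5). Deciding which gap matters more is exactly the kind of contextual judgment a framework cannot settle on its own.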

5) Introducing implementation challenges

The challenge lies in applying frameworks correctly. Truly comprehensive frameworks may require a legion of auditors and compliance support, and with risk-management frameworks in particular, overlooking an obvious grey area isn't an option. There is also a domino effect: frameworks give rise to more frameworks. Contextualising and applying them demands specialised knowledge and effort, which isn't always readily available.

The frameworks - can a consensus be reached?

At the time of writing, there are more than 80 identified frameworks promoting responsible and trustworthy AI. Not only have the frameworks taken on a life of their own, but they have also gone meta. There is now a framework for the selection of frameworks.

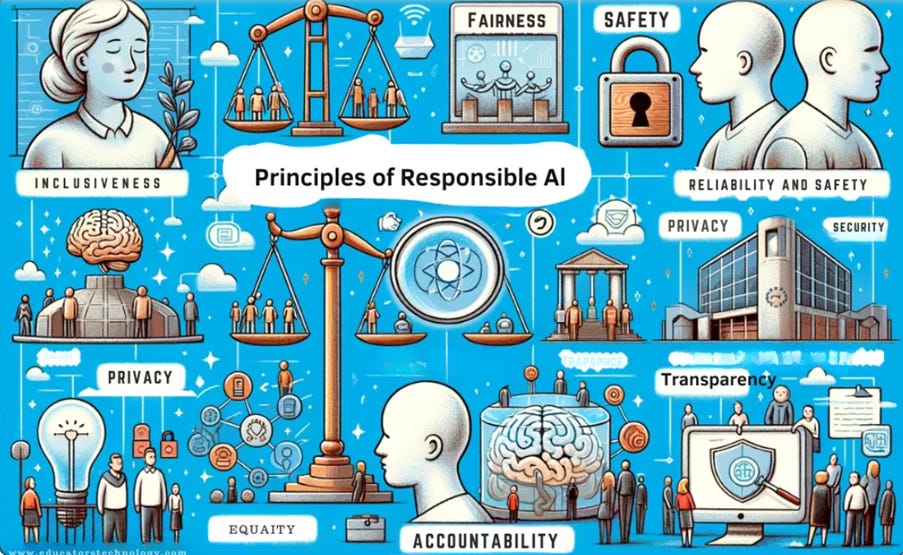

The three most influential frameworks are likely NIST's AI Risk Management Framework, the EU AI Act, and now ISO/IEC 42001. Notably, recent research indicates a global convergence around five ethical principles: transparency, justice and fairness, non-maleficence, responsibility, and privacy.

However, the proof is in the pudding. Currently, there's no consensus on the best approach to operationalise these principles or even interpret them. As national regulations progress, and with the hope that some gain enforcement power, the AI regulation landscape may undergo significant changes.

In a nutshell, the verdict on frameworks is a mixed bag. Can we trust corporate ethics alone to stand against irresponsible AI practices? Not exactly. Lately, a murmur of concern has emerged in the responsible AI community that the field might be overshadowed by the broader realm of AI assurance.

AI governance frameworks are pivotal to responsible AI efforts, yet they mustn't steal the entire spotlight. Let's envision a different course for the future, one where responsible AI initiatives gain momentum. In this scenario, responsible tech practices become commonplace, with AI ethics rightfully claiming a seat beside the luminaries of AI innovation, safety, governance, and assurance.

I am placing my bet on the optimal future for responsible AI, which involves regulatory support, resilient AI governance, and a diverse array of ethical AI initiatives.